CIFAR-10 - Image Classifier

- Roma Fatima

- Nov 2, 2021

We are given an image classifier for CIFAR-10, in which the input images are processed and assigned to one of a fixed set of categories. Our objective is to improve the average accuracy of this classification. I improved the accuracy of this model to 63%.

First, we walk through the basic CIFAR-10 image classifier code that we were given.

We start the program by importing the torch and torchvision packages; torchvision is used to load and normalize the training and test data sets.

Next, we visualize a few random training images.
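Before a normalized image can be displayed, the normalization has to be undone and the channel axis moved to the end. A small sketch, assuming the images were normalized with a mean and standard deviation of 0.5; the function name `to_displayable` is hypothetical.

```python
import torch


def to_displayable(img):
    """Convert a normalized CHW image tensor into an HWC tensor in [0, 1]."""
    img = img / 2 + 0.5          # undo (x - 0.5) / 0.5, so [-1, 1] -> [0, 1]
    return img.permute(1, 2, 0)  # channels-first (C, H, W) -> (H, W, C)


# A random stand-in for one normalized CIFAR-10 image.
img = torch.rand(3, 32, 32) * 2 - 1
displayable = to_displayable(img)
```

The result has the height-width-channel layout that image plotting functions such as matplotlib's `imshow` expect.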

Now we design a Convolutional Neural Network (CNN) with two convolutional layers, conv1 and conv2, and three fully connected layers, fc1, fc2 and fc3.
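A sketch of such a network, using the layer names from the text. The channel counts, kernel sizes and hidden layer widths are assumed from the referenced PyTorch tutorial and may differ from the code I actually ran.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class Net(nn.Module):
    def __init__(self):
        super().__init__()
        self.conv1 = nn.Conv2d(3, 6, 5)        # 3 input channels (RGB), 5x5 kernel
        self.pool = nn.MaxPool2d(2, 2)         # halves height and width
        self.conv2 = nn.Conv2d(6, 16, 5)
        self.fc1 = nn.Linear(16 * 5 * 5, 120)
        self.fc2 = nn.Linear(120, 84)
        self.fc3 = nn.Linear(84, 10)           # one output per CIFAR-10 class

    def forward(self, x):
        x = self.pool(F.relu(self.conv1(x)))   # 32x32 -> 28x28 -> 14x14
        x = self.pool(F.relu(self.conv2(x)))   # 14x14 -> 10x10 -> 5x5
        x = torch.flatten(x, 1)                # keep the batch dimension
        x = F.relu(self.fc1(x))
        x = F.relu(self.fc2(x))
        return self.fc3(x)


net = Net()
scores = net(torch.randn(4, 3, 32, 32))        # a batch of 4 random "images"
```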

Then we define a loss function and an optimizer.
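As a sketch, assuming the choices made in the referenced tutorial (cross-entropy loss and SGD with a learning rate of 0.001 and momentum of 0.9), a single optimization step looks like this. The small linear `model` is only a stand-in for the CNN.

```python
import torch
import torch.nn as nn
import torch.optim as optim

model = nn.Linear(8, 10)  # hypothetical stand-in for the CNN

criterion = nn.CrossEntropyLoss()
optimizer = optim.SGD(model.parameters(), lr=0.001, momentum=0.9)

inputs = torch.randn(4, 8)             # 4 random samples
labels = torch.tensor([0, 3, 3, 9])    # their class indices

before = model.weight.detach().clone()
optimizer.zero_grad()                  # clear gradients from any earlier step
loss = criterion(model(inputs), labels)
loss.backward()                        # compute gradients of the loss
optimizer.step()                       # update the weights
weights_changed = not torch.equal(before, model.weight.detach())
```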

Now we train the model by looping over the training data.
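The loop can be sketched as follows. The function name `train`, the synthetic data and the small stand-in network are assumptions made to keep the example self-contained; the real code iterates over the CIFAR-10 training loader instead.

```python
import torch
import torch.nn as nn
import torch.optim as optim
from torch.utils.data import DataLoader, TensorDataset


def train(net, loader, criterion, optimizer, epochs=2):
    """Train for `epochs` passes over `loader`; return the mean loss per epoch."""
    epoch_losses = []
    for epoch in range(epochs):
        running_loss = 0.0
        for inputs, labels in loader:
            optimizer.zero_grad()
            loss = criterion(net(inputs), labels)
            loss.backward()
            optimizer.step()
            running_loss += loss.item()
        epoch_losses.append(running_loss / len(loader))
    return epoch_losses


# Synthetic stand-ins for the CIFAR-10 images and labels.
images = torch.randn(16, 3, 32, 32)
labels = torch.randint(0, 10, (16,))
loader = DataLoader(TensorDataset(images, labels), batch_size=4, shuffle=True)

net = nn.Sequential(nn.Flatten(), nn.Linear(3 * 32 * 32, 10))  # stand-in model
criterion = nn.CrossEntropyLoss()
optimizer = optim.SGD(net.parameters(), lr=0.001, momentum=0.9)

losses = train(net, loader, criterion, optimizer, epochs=2)
```

Keeping the number of epochs as a parameter makes it easy to change later without touching the loop itself.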

We now save our trained model.

As a trial, we now test the trained network on a few images from the test data set.

We load the previously saved model.
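Saving and loading can be sketched with the model's state dictionary. The file name `cifar_net.pth` is assumed from the referenced tutorial, the temporary folder is my own choice for the example, and the small linear layer stands in for the CNN.

```python
import os
import tempfile

import torch
import torch.nn as nn

net = nn.Linear(4, 2)  # hypothetical stand-in for the trained CNN

# Save only the learned parameters, not the whole Python object.
path = os.path.join(tempfile.mkdtemp(), "cifar_net.pth")
torch.save(net.state_dict(), path)

# Loading requires a model with the same architecture.
restored = nn.Linear(4, 2)
restored.load_state_dict(torch.load(path))

same_weights = torch.equal(net.weight, restored.weight)
```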

Now the neural network classifies the data.

The network outputs an energy for each of the 10 classes, and we select the index of the highest energy as the predicted class.
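A small sketch of this selection with `torch.max`. The class names follow the order used in the referenced tutorial, and the energies below are made-up values for illustration, not actual network output.

```python
import torch

classes = ('plane', 'car', 'bird', 'cat', 'deer',
           'dog', 'frog', 'horse', 'ship', 'truck')

# Made-up energies for two images, one row per image, one column per class.
outputs = torch.tensor([
    [0.1, 0.3, 2.5, 0.0, -1.0, 0.2, 0.4, 0.0, 0.1, 0.3],
    [3.0, 0.5, 0.1, 0.2, 0.0, -0.5, 0.3, 0.1, 1.0, 0.2],
])

# torch.max along dimension 1 returns (max values, indices of the max values).
_, predicted = torch.max(outputs, 1)
predicted_names = [classes[i] for i in predicted.tolist()]
```

Here the first row peaks at index 2 and the second at index 0, so the predictions are `bird` and `plane`.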

Since the trial worked, we now evaluate the network on the whole test data set and compute its overall accuracy.
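The overall accuracy is the number of correct predictions divided by the number of test images. A sketch, where the function name `evaluate_accuracy` is hypothetical and the toy check uses `nn.Identity` as the "network" so that the inputs are already the class scores:

```python
import torch
import torch.nn as nn
from torch.utils.data import DataLoader, TensorDataset


def evaluate_accuracy(net, loader):
    """Return the percentage of samples in `loader` that `net` classifies correctly."""
    correct = 0
    total = 0
    with torch.no_grad():  # no gradients are needed for evaluation
        for inputs, labels in loader:
            _, predicted = torch.max(net(inputs), 1)
            total += labels.size(0)
            correct += (predicted == labels).sum().item()
    return 100.0 * correct / total


# Toy data: four score rows, of which the last one is "misclassified".
scores = torch.tensor([[2.0, 0.0, 0.0],
                       [0.0, 2.0, 0.0],
                       [0.0, 0.0, 2.0],
                       [2.0, 0.0, 0.0]])
labels = torch.tensor([0, 1, 2, 1])
loader = DataLoader(TensorDataset(scores, labels), batch_size=2)

accuracy = evaluate_accuracy(nn.Identity(), loader)
```

With three of the four toy samples correct, this gives 75%.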

We also measure the performance of each class.
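Per-class accuracy follows the same idea, but keeps separate counters for each class. In this sketch the function name `per_class_accuracy` is hypothetical, and the three-class toy data is only there to make the example checkable; the real code uses all 10 CIFAR-10 classes.

```python
import torch
import torch.nn as nn
from torch.utils.data import DataLoader, TensorDataset


def per_class_accuracy(net, loader, classes):
    """Return {class name: accuracy in percent} for classes present in `loader`."""
    correct = {name: 0 for name in classes}
    total = {name: 0 for name in classes}
    with torch.no_grad():
        for inputs, labels in loader:
            _, predicted = torch.max(net(inputs), 1)
            for label, prediction in zip(labels.tolist(), predicted.tolist()):
                total[classes[label]] += 1
                if label == prediction:
                    correct[classes[label]] += 1
    return {name: 100.0 * correct[name] / total[name]
            for name in classes if total[name] > 0}


# Toy data: nn.Identity passes the scores through unchanged.
toy_classes = ('cat', 'dog', 'frog')
scores = torch.tensor([[2.0, 0.0, 0.0],
                       [0.0, 2.0, 0.0],
                       [0.0, 0.0, 2.0],
                       [2.0, 0.0, 0.0]])
labels = torch.tensor([0, 1, 2, 1])  # the last 'dog' is predicted as 'cat'
loader = DataLoader(TensorDataset(scores, labels), batch_size=2)

result = per_class_accuracy(nn.Identity(), loader, toy_classes)
```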

My Contribution:

An epoch is one complete pass over the training data set, so the number of epochs is the number of times the model iterates over that data. According to the blog post referenced below, it is possible to increase the accuracy up to 94% by increasing the number of epochs to 50.

For my image classifier, I increased the number of epochs from 2 to 9. As a result, the accuracy rose to 63%.

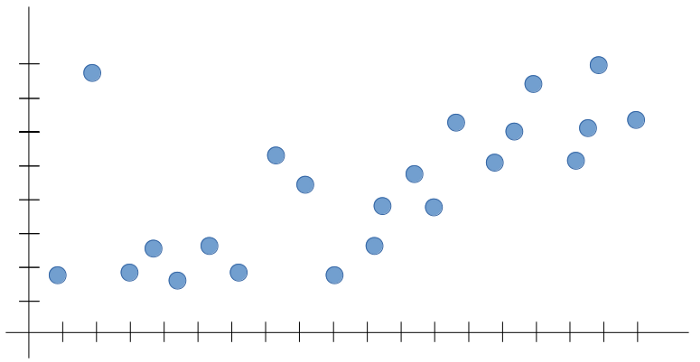

We compute the final accuracy now.

Challenges Faced:

The biggest challenge in running a high number of epochs was that the execution time increased drastically. The execution time was almost directly proportional to the number of epochs.

Another challenge I faced was overfitting. After a certain point the accuracy went down and the model wasn't usable anymore. Even though the referenced blog post suggested that 50 epochs can give an accuracy of 94%, this did not work on my model due to overfitting.
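One common way to find the point where accuracy starts to fall is to record the accuracy after every epoch and stop once it has not improved for a few epochs in a row. This is not something I did in this experiment; the sketch below only illustrates the idea. The function name, the `patience` parameter and the accuracy values are all made up for illustration and are not my measured results.

```python
def find_stopping_epoch(accuracies, patience=2):
    """Return (best_epoch, stop_epoch), both 1-based.

    Training stops at the first epoch that is `patience` epochs past the
    best accuracy seen so far, or at the last epoch if that never happens.
    """
    best_accuracy = float("-inf")
    best_epoch = 0
    for epoch, accuracy in enumerate(accuracies, start=1):
        if accuracy > best_accuracy:
            best_accuracy, best_epoch = accuracy, epoch
        elif epoch - best_epoch >= patience:
            return best_epoch, epoch
    return best_epoch, len(accuracies)


# Made-up per-epoch accuracies that rise and then fall, as with overfitting.
illustrative_accuracies = [0.45, 0.52, 0.58, 0.61, 0.60, 0.59, 0.57]
best_epoch, stop_epoch = find_stopping_epoch(illustrative_accuracies, patience=2)
```

In this made-up run the accuracy peaks at epoch 4 and training would stop at epoch 6, which also saves the execution time of the remaining epochs.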

Experiments:

I tried increasing the number of convolutional layers from 2 to 3, 4, 5, 6 and even 7, with self-pooling, to improve the accuracy.
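The exact architectures from these trials are not shown here, so the following is only a hypothetical sketch of how the number of convolutional layers could be made configurable. The function name `make_cnn`, the 3x3 kernels with padding, the 16 channels and the choice to pool only after the first three layers are all assumptions. Pooling has to be limited because a 32x32 image can only be halved five times before nothing is left.

```python
import torch
import torch.nn as nn


def make_cnn(num_conv_layers, channels=16, max_pools=3):
    """Build a CNN for 32x32 RGB images with `num_conv_layers` conv layers."""
    layers = []
    in_channels = 3
    size = 32  # current height and width of the feature maps
    for i in range(num_conv_layers):
        # padding=1 with a 3x3 kernel keeps the height and width unchanged
        layers += [nn.Conv2d(in_channels, channels, kernel_size=3, padding=1),
                   nn.ReLU()]
        if i < max_pools:
            layers.append(nn.MaxPool2d(2, 2))
            size //= 2
        in_channels = channels
    layers += [nn.Flatten(),
               nn.Linear(channels * size * size, 120), nn.ReLU(),
               nn.Linear(120, 84), nn.ReLU(),
               nn.Linear(84, 10)]
    return nn.Sequential(*layers)


# Each depth from the experiments produces one score per class.
batch = torch.randn(2, 3, 32, 32)
output_shapes = {n: tuple(make_cnn(n)(batch).shape) for n in range(2, 8)}
```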

Unfortunately, changing these hyperparameters wasn't very effective, as the maximum accuracy I could reach across all the 6 trials was 56%.

References:

Reference for the code and its explanation of the CIFAR-10 Image Classifier: https://pytorch.org/tutorials/beginner/blitz/cifar10_tutorial.html#sphx-glr-beginner-blitz-cifar10-tutorial-py

Reference for the epoch value changing in the section of 'My Contribution': https://blog.fpt-software.com/cifar10-94-of-accuracy-by-50-epochs-with-end-to-end-training

Reference for the image of the blog: https://www.cs.toronto.edu/~kriz/cifar.html
